HomeLab v2: Decentralised Data Warehousing

Building a decentralised data warehousing and compute infrastructure.

Following my foray into HomeLab setups, it was time to evolve. This meant shifting my focus towards three concepts: sustainability, reliability and scalability. Shoutout to my mentor @sukanto.sahoo!

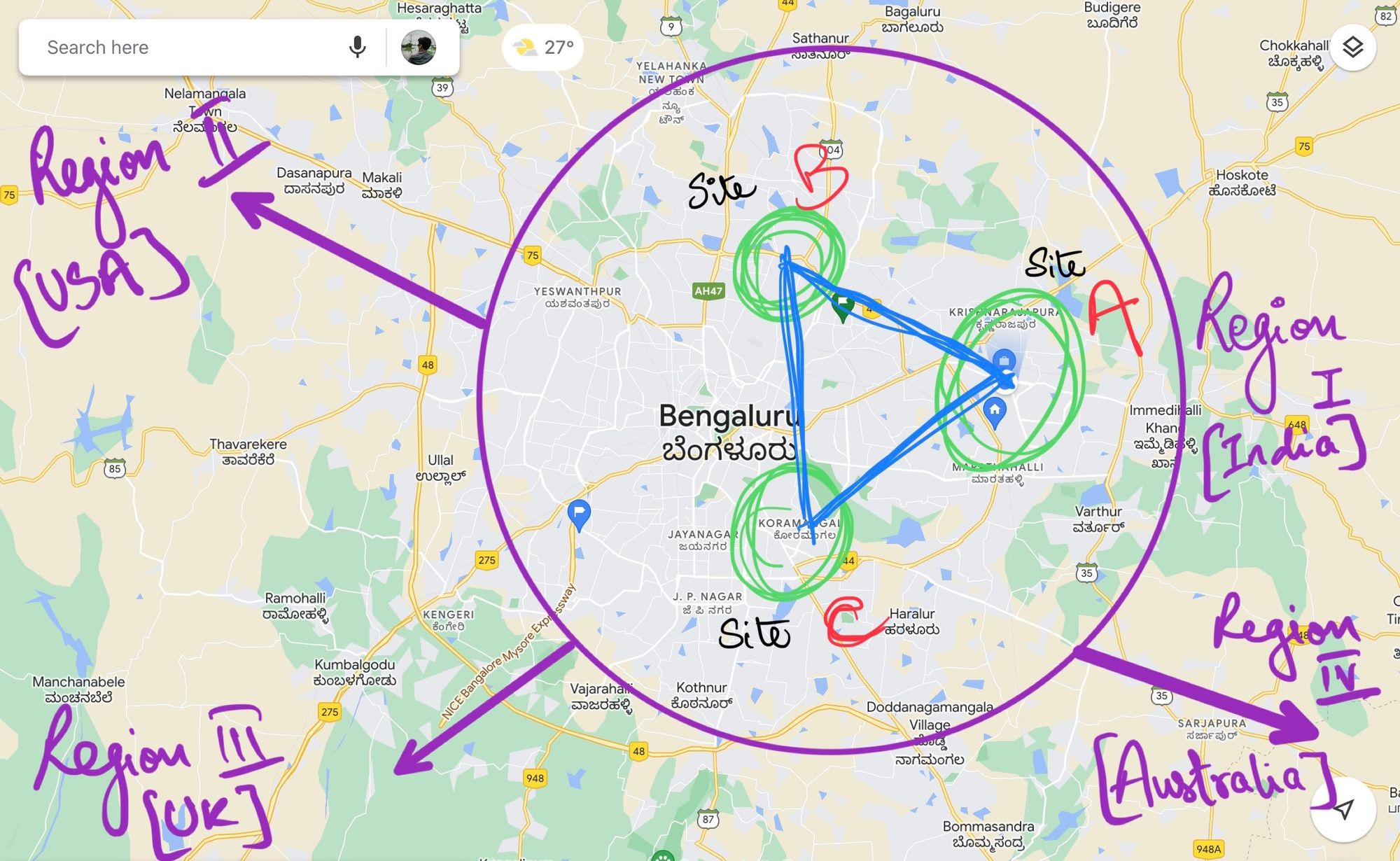

To achieve reliability, a multi-region infrastructure deployment is required. The network contains nodes spread across physical locations and connected via VPN. This allows failover to another location if one of them suffers a disaster. Load balancing across the sites' IPs will be handled by the DNS provider. At the DNS layer, we can define rules that route traffic to the nearest region, which should in theory help minimise latency.
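The routing rule described above can be sketched roughly as follows. This is only an illustration of latency-based routing with failover, not any DNS provider's actual configuration; the site names, the documentation-range IPs and the latency figures are all made up.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Site:
    name: str          # hypothetical site label
    public_ip: str     # placeholder address from the 203.0.113.0/24 documentation range
    latency_ms: float  # latency as measured from the client's region (assumed input)
    healthy: bool      # result of a health check (assumed input)


def pick_site(sites: List[Site]) -> Optional[Site]:
    """Return the healthy site with the lowest latency, or None if every site is down."""
    candidates = [s for s in sites if s.healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda s: s.latency_ms)


sites = [
    Site("site-a", "203.0.113.10", 12.0, True),
    Site("site-b", "203.0.113.20", 35.0, True),
    Site("site-c", "203.0.113.30", 80.0, True),
]

nearest = pick_site(sites)
print(nearest.name if nearest else "no site available")
```

If `site-a` fails its health check, the same function falls through to `site-b`, which is the failover behaviour I am after.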

Keeping data consistent is another challenge that is yet to be solved. After closely studying the CAP theorem, I hope the solution I've put down further below works towards my goals.

It's like building a datacenter that's spread across multiple homes. In this case, it's between a few trusted friends. I will refer to homes as sites going forward!

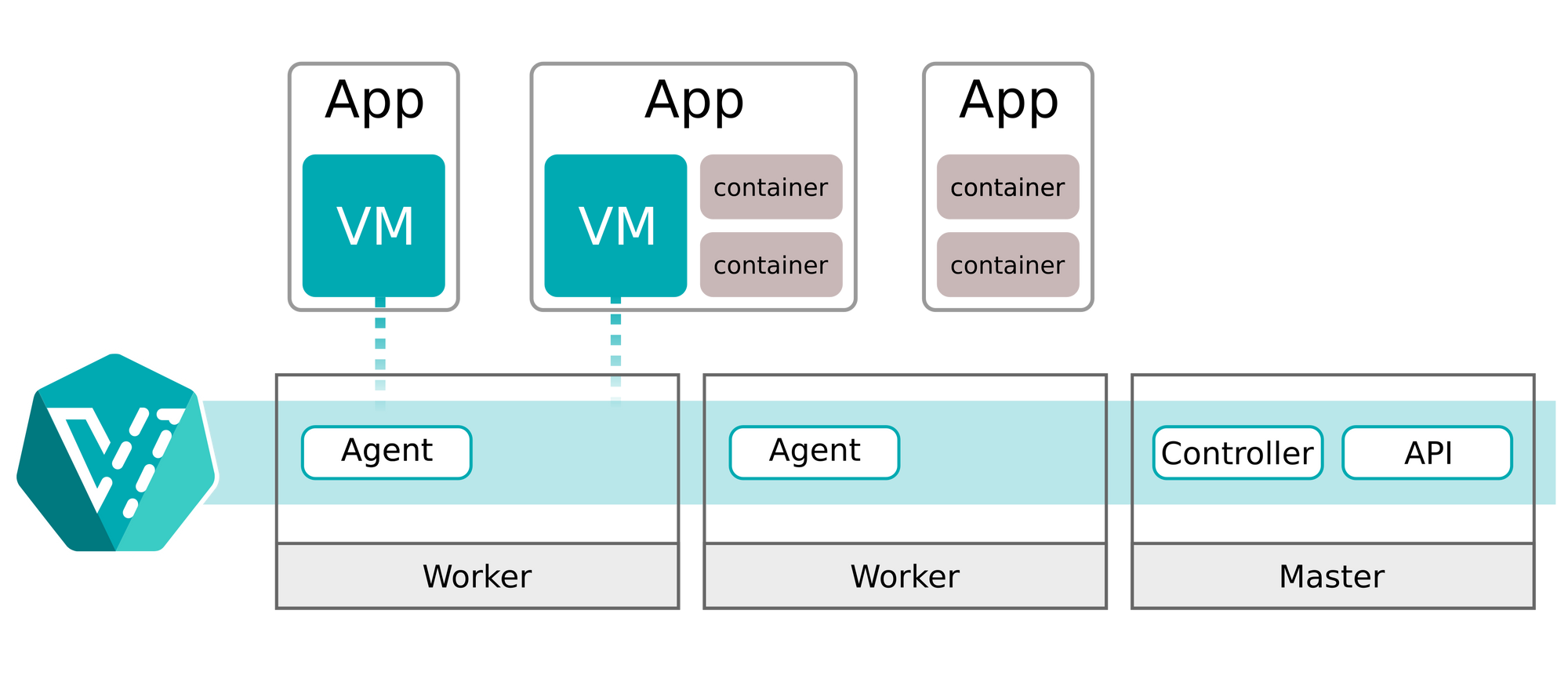

Part of having a reliable system involves it being scalable. Nothing screams scalability like Kubernetes. Having a flavour of Kubernetes with a hyper-converged infrastructure (HCI) solution is paramount. VMware has a great setup but requires some capital to get going, so going open source was the only viable option at this point in time. This leaves me with SUSE's open-source stack. Harvester is one such solution that works well and covers most of my needs.

Harvester is a cloud native HCI platform that is built on open source technologies, including Kubernetes, Longhorn, and KubeVirt. It is designed to help operators put together their virtual machine workloads with their Kubernetes workloads, making it easier to manage and deploy applications.

Kubernetes is fairly straightforward, nothing new. It's been around for a while. It's basically an open-source container orchestration system that automates deployment, scaling, and management of containerized applications. It is a portable, extensible, and scalable platform that can be used to deploy applications on a variety of infrastructure, including bare metal, virtual machines, and cloud platforms.

Longhorn is a distributed storage system that is designed to be highly available and scalable. It achieves this by replicating data across multiple nodes in a cluster. This means that if one node fails, the data is still available on the other nodes.
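To make the replication idea concrete, here is a toy model of a volume that keeps a copy of every block on several nodes. It is a sketch of the general technique only, not Longhorn's implementation or API; the class, method and node names are hypothetical.

```python
class ReplicatedVolume:
    """Toy volume that copies every block to each healthy replica node."""

    def __init__(self, node_names):
        # One independent block store per node (hypothetical node names).
        self.replicas = {name: {} for name in node_names}
        self.failed = set()

    def healthy_nodes(self):
        return [n for n in self.replicas if n not in self.failed]

    def write(self, block, data):
        """Write the block to every healthy replica and return how many copies exist."""
        nodes = self.healthy_nodes()
        if not nodes:
            raise RuntimeError("no healthy replica available")
        for n in nodes:
            self.replicas[n][block] = data
        return len(nodes)

    def fail(self, node):
        """Simulate a node going offline."""
        self.failed.add(node)

    def read(self, block):
        """Read the block from the first healthy replica."""
        nodes = self.healthy_nodes()
        if not nodes:
            raise RuntimeError("no healthy replica available")
        return self.replicas[nodes[0]][block]


volume = ReplicatedVolume(["node-1", "node-2", "node-3"])
volume.write(0, b"hello")
volume.fail("node-1")
print(volume.read(0))  # still served by a surviving replica
```

A real system also has to rebuild a failed replica once the node returns, which this sketch leaves out.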

As far as I understand it, Longhorn fits into the CAP theorem by leaning towards consistency: the engine replicates each write synchronously to the volume's replicas, so an acknowledged write should not be lost when a single node fails. The volume stays usable as long as a healthy replica remains reachable, and a failed replica is rebuilt from a healthy one. The cost is write latency, which matters when replicas sit in different sites.

KubeVirt is an open-source project that enables the management of virtual machines (VMs) on Kubernetes. It does this by extending the Kubernetes API to support VMs as first-class citizens. This means that you can create, manage, and deploy VMs using the same tools and concepts that you use for containers.
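As a rough illustration of a VM being just another Kubernetes object, the sketch below builds a minimal KubeVirt `VirtualMachine` manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts. The VM name, memory size and demo container disk image are assumptions borrowed from the style of the KubeVirt quickstart, and Harvester normally creates these objects for you through its own UI.

```python
import json


def build_vm_manifest(name, memory, image):
    """Return a minimal KubeVirt VirtualMachine manifest as a dict (illustrative values only)."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            "running": False,  # create the VM definition without starting it
            "template": {
                "metadata": {"labels": {"kubevirt.io/domain": name}},
                "spec": {
                    "domain": {
                        "devices": {
                            "disks": [
                                {"name": "containerdisk", "disk": {"bus": "virtio"}}
                            ]
                        },
                        "resources": {"requests": {"memory": memory}},
                    },
                    "volumes": [
                        {"name": "containerdisk", "containerDisk": {"image": image}}
                    ],
                },
            },
        },
    }


manifest = build_vm_manifest(
    name="testvm",  # hypothetical VM name
    memory="64M",
    image="quay.io/kubevirt/cirros-container-disk-demo",  # small demo image, assumed
)
print(json.dumps(manifest, indent=2))
```

Saving the output to a file and applying it with `kubectl` is the same workflow used for a Deployment or a Pod, which is the point of treating VMs as first-class citizens.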

Apart from having fault tolerance within each site at the system level with RAID, data will also be kept redundant across sites to aid disaster recovery.

To sustain the use of compute, I will be turning to a solar power plant. Part of its output will be used to run the systems in my network rack.

The plan is to have spare systems sitting in three homes connected via VPN. These systems would remain in sync and run workloads like our websites, cloud storage and home automation systems.

This is an exciting time to be in the tech industry, and I can't wait to see how this goes. Will update this space with time!

Update (19 July 2023):

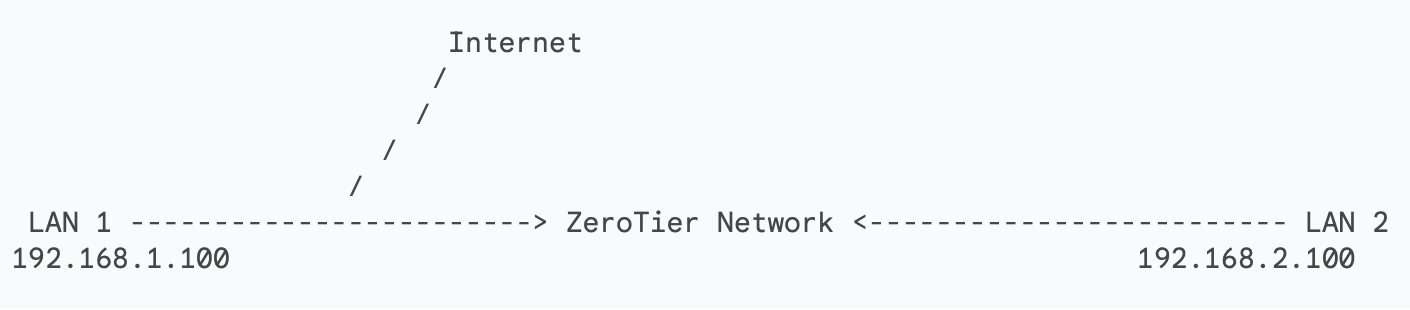

Obtained public IPs for each site. In parallel, I am exploring ZeroTier as a peer-to-peer VPN solution to enable connectivity between networks. This would be an alternative to running a VPN (L2TP with IPsec, or OpenVPN) over the public IPs obtained.

I was able to ping a system in another physical location using ZeroTier. Next, I will need to set up K8s nodes that talk to each other and share the workload over this VPN. In the background, the packets are routed over the internet using UDP hole punching.
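The core move in UDP hole punching is that both peers send a datagram to each other's public endpoint at roughly the same time, so each NAT sees outbound traffic and lets the reply in. The sketch below shows only that exchange, on loopback where no NAT is involved. In a real setup the peers first learn each other's public address from a rendezvous server, and ZeroTier's own protocol is considerably more involved. All names here are hypothetical.

```python
import socket


def open_peer():
    """Create a UDP socket bound to an ephemeral loopback port."""
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    s.bind(("127.0.0.1", 0))
    s.settimeout(2.0)  # avoid blocking forever if a datagram is lost
    return s


def punch(peer_a, peer_b):
    """Have both peers send first, then read what each one received."""
    addr_a = peer_a.getsockname()
    addr_b = peer_b.getsockname()
    # Each side sends to the other's endpoint. Behind a NAT, this outbound
    # packet is what opens the mapping for the other side's traffic.
    peer_a.sendto(b"hello from a", addr_b)
    peer_b.sendto(b"hello from b", addr_a)
    received_by_b, _ = peer_b.recvfrom(1024)
    received_by_a, _ = peer_a.recvfrom(1024)
    return received_by_a, received_by_b


a, b = open_peer(), open_peer()
try:
    print(punch(a, b))
finally:
    a.close()
    b.close()
```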

Update (6 Aug 2023):

With more research and testing, we decided to run a VPN server over our static IPs and make use of HashiCorp's suite of software, starting with Nomad. We are still evaluating its use over SUSE's stack.

Final Update (11 Jan 2024):

We now have stable infrastructure with disaster recovery standards. Considering this project to be production ready, we have onboarded failover services for clients and have been testing its reliability with workloads.

The potential for generating revenue with the added benefit of support seems very feasible. We are actively engaging with clients to try new and exciting ideas!